AIMS

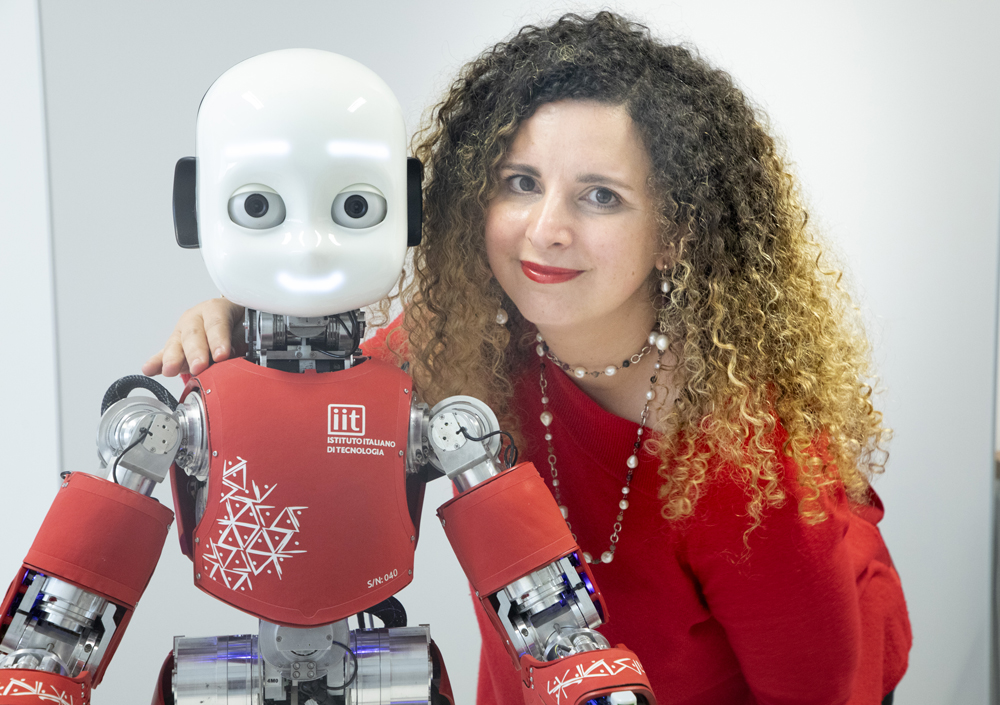

The wHiSPER project (investigating Human Shared PErception with Robots) originates from an idea by Alessandra Sciutti to study how perception develops during social interaction. It is carried out within the COgNitive Architecture for Collaborative Technologies (CONTACT) Unit of IIT.

The unit takes a human-centric approach, studying both Human-Human and Human-Robot Interaction from an interdisciplinary point of view. In this respect, building robots is not the unit's only aim: robots are used both as tools for the quantitative analysis of human interaction and as a means to increase the readiness and effectiveness of human-robot collaboration. A better understanding of the bases of interaction, together with more intuitive and adaptive robots, will facilitate the adoption of collaborative technologies, particularly by the more vulnerable members of our society, who might benefit most from their use.

Towards a social idea of perception

The project starts from two observations. On the one hand, our perception of the world depends both on incoming sensory information and on our prior knowledge. Even our judgment of the duration of a time interval or of the length of a segment is influenced by previous experience and by our expectations. On the other hand, our perspective on the world is neither static nor purely individual: it can change during social interaction, where two agents dynamically influence each other, often achieving a high level of coordination.

Building on these observations, wHiSPER proposes to study, for the first time, how basic mechanisms of spatial and temporal perceptual inference are modified during interaction, moving the investigation from an individual to an interactive, shared context.

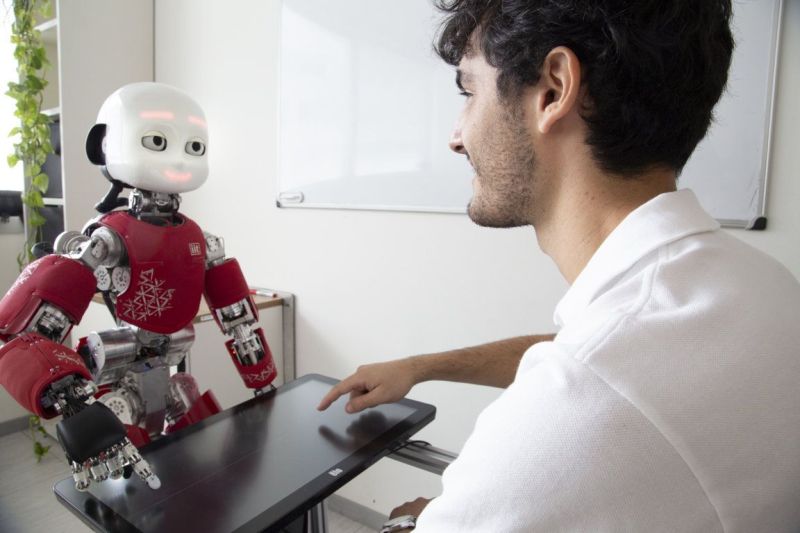

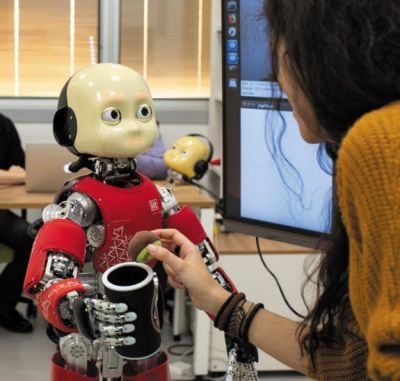

To allow rigorous and systematic control, wHiSPER uses the humanoid robot iCub as an interactive agent that serves as an investigation probe, with its perceptual and motor decisions fully under the experimenter's control.

Our five main objectives

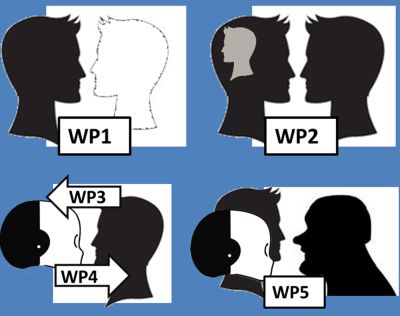

- WP1: determine how being involved in an interactive context influences perceptual inference

We demonstrated for the first time that visual perception of space changes during interaction with a robotic agent, as long as the robot is perceived as a social being (Mazzola et al. HRI 2020, honorable mention).

- WP2: assess how perceptual priors generalize to the observation of others' actions

We assessed how the style of an action affects observers' perception and response (Lombardi et al. Sci Rep 2021). These results confirm that our motor models also act as priors for action style, influencing how the properties of others' movements are processed.

- WP3: understand whether and how individual perception aligns with others' priors

We identified significant differences in the social influence exerted by computers, robots, and humans (Zonca et al. Sci. Rep 2021, Int. Jour. Soc. Rob. 2023). These findings shed light on the mechanisms regulating learning and advice-taking in human-robot interaction when human and robot perceptions differ.

- WP4: assess how shared perception with a robot can be enabled

We developed algorithms to read facial expressions (Barros et al. SN Comp. Sci. 2020, IEEE Access 2022) and cognitive load (Pasquali et al. HRI 2021), as well as simple architectures for social adaptation, with a focus on internal motivation systems supporting autonomous decision-making and personalization (Tanevska et al. Front. Rob. AI 2020).

- WP5: determine whether perceptual inference during interaction is modified with aging

We observed that, despite a significant reduction in temporal perceptual acuity already in the early phases of aging, the inferential process is preserved (Incao et al. 2022 Front Rob AI).

wHiSPER will combine rigorous psychophysical methods, Bayesian modeling and human-robot interaction, adapting well-established paradigms from the study of visual perception to a novel interactive context.

Accordingly, in several experiments the humanoid robot and the participants will be shown simple temporal or spatial stimuli, which they will have to perceive either to reproduce them or to perform a coordinated joint action (such as passing an object). Measurements of the reproduced intervals and of the action kinematics will make it possible to quantify, through Bayesian modeling, how social interaction influences visual perception.
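To make the idea of Bayesian modeling of reproduced intervals concrete, the sketch below implements a textbook Gaussian observer, assumed here for illustration only and not the project's actual model. A presented interval is measured with Gaussian noise, combined with a Gaussian prior over intervals, and the posterior mean is taken as the reproduced interval. All function names and parameter values are hypothetical. The model predicts the well-known central-tendency effect: the noisier the measurement, the more reproductions are pulled toward the prior mean, so the slope of reproduced versus presented intervals falls below 1.

```python
import random


def posterior_weight(sigma_prior: float, sigma_meas: float) -> float:
    """Weight given to the sensory measurement when a Gaussian prior and a
    Gaussian likelihood are combined; the prior mean gets 1 - weight."""
    return sigma_prior**2 / (sigma_prior**2 + sigma_meas**2)


def bayesian_estimate(measurement: float, mu_prior: float,
                      sigma_prior: float, sigma_meas: float) -> float:
    """Posterior mean of the interval given one noisy measurement."""
    w = posterior_weight(sigma_prior, sigma_meas)
    return w * measurement + (1.0 - w) * mu_prior


def simulate_reproductions(intervals, mu_prior, sigma_prior, sigma_meas, seed=0):
    """Simulate one reproduced interval per presented interval.

    Assumption: the measurement is the true interval plus Gaussian sensory
    noise, and motor noise in the reproduction is ignored.
    """
    rng = random.Random(seed)
    return [
        bayesian_estimate(t + rng.gauss(0.0, sigma_meas),
                          mu_prior, sigma_prior, sigma_meas)
        for t in intervals
    ]


def regression_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var = sum((a - mx) ** 2 for a in x)
    return cov / var


if __name__ == "__main__":
    # Hypothetical stimulus set and prior, in seconds.
    intervals = [0.4, 0.6, 0.8, 1.0, 1.2] * 200
    mu_prior, sigma_prior = 0.8, 0.2
    for sigma_meas in (0.05, 0.2):
        reps = simulate_reproductions(intervals, mu_prior, sigma_prior, sigma_meas)
        print(f"sigma_meas={sigma_meas}: "
              f"expected slope={posterior_weight(sigma_prior, sigma_meas):.3f}, "
              f"fitted slope={regression_slope(intervals, reps):.3f}")
```

In a sketch like this, the fitted slope summarizes how strongly reproductions rely on the prior rather than on the measurement; comparing such a parameter across individual and interactive conditions is one possible way to express a change in perceptual inference, under the stated Gaussian assumptions.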