Workshop

Our perception of the world, in particular the aspects influenced by affective understanding, depends on both sensory perception and prior knowledge. Most current research on computational models of affective behavior grounds its contribution in pre-trained, purely data-driven learning models or in the reproduction of existing human behavior. Such approaches yield easily reproducible solutions, but these solutions fail when applied to complex social scenarios.

Understanding shared perception as part of the affective processing mechanisms will allow us to tackle this problem and to take the next step towards a real-world affective computing system. The goal of this workshop is to present and discuss new findings, theories, systems, and trends in computational models of affective shared perception. The workshop will feature a multidisciplinary list of invited speakers with experience in different aspects of social interaction, enabling a rich and diverse debate about our overarching theme of affective shared perception.

Program

The workshop is scheduled to take place on 30 October 2020. It will start at 11h00 UTC-4 (16h00 CET) and will have a total duration of 3h30min.

Participation is free, and a Zoom room will be available shortly before the workshop starts. The current program is as follows:

- Welcome by the Organizers (5 min)

- Invited Speaker: Yukie Nagai (30 min)

- Shared Perception & Empathy Live Stream session I + Q&A (15 min)

- Shared Perception & Empathy Live Stream session II + Q&A (15 min)

- Break (10 min)

- Invited Speaker: Ellen Souza (30 min)

- Methods for Shared Perception Live Stream session I + Q&A (25 min)

- Methods for Shared Perception Live Stream session II + Q&A (15 min)

- Break (10 min)

- Invited Speaker: Ginevra Castellano (30 min)

Accepted Contributions

A total of 13 abstracts were accepted to the workshop.

All presentations are available in a playlist on our YouTube channel:

The abstracts are separated into two presentation sessions:

Shared Perception & Empathy

Emma Hughson, Roya Javadi, Angelica Lim. Investigating the Role of Culture on Negative Emotional Expressions in the Wild.

Janderson Ferreira, Agostinho A. F. Júnior, Yves M. Galvão, Bruno J. T. Fernandes. Empathy Path Planning.

Leandro H. de S. Silva, Letícia Portela, Nathália R. C. da Silva, Agostinho A. F. Júnior, Bruno J. T. Fernandes. Non-verbal communication empathy assessment using extended OMG-Empathy dataset.

Justin Heer, Jie Yan, Angelica Lim. Joyful or Nervous? A Dataset of Awkward, Embarrassed and Uncomfortable Smiles.

Nikoletta Xirakia, Tayfun Alpay, Pablo Barros, Stefan Wermter. Multimodal Integration for Empathy Recognition.

Matthias Kerzel and Stefan Wermter. Towards a Data Generation Framework for Affective Shared Perception and Social Cue Learning Using Virtual Avatars?

Methods for Shared Perception

Xiaojing Xu, Virginia R. de Sa. Pain Evaluation in Video using Extended Multitask Learning from Multidimensional Measurements.

Kumar T. Rajamani, Srividya Rajamani. An Enhanced Speech Emotion Recognition System using Attention Network.

Marco Matarese, Francesco Rea and Alessandra Sciutti. Shared perception in mutual visual orienting: A comparative study.

Yuchou Chang. Human-Robot Shared Perception for Categorizing Object via Deep Canonical Correlation Analysis.

H. Bukowski, M. Tik, G. Silani, C. C. Ruff, C. Windischberger, C. Lamm. From shared to distinct affects via rTMS/fMRI combined: Neuroenhancement of self-other distinction in empathy depends on dispositional empathic understanding.

Stefano Rovetta, Zied Mnasri, Francesco Masulli, Alberto Cabri. Emotion detection from speech in dialog systems: advances and challenges.

Finn Rietz, Alexander Sutherland, Suna Bensch, Stefan Wermter, Thomas Hellström. WoZ4U: An Open Source Wizard-of-Oz Interface for the Pepper robot.

Registration

Registration for the workshop is free, but to guarantee a place, please register here.

Invited Speakers

Ginevra Castellano

Ginevra Castellano is a Professor in Intelligent Interactive Systems at the Department of Information Technology, Uppsala University, where she leads the Uppsala Social Robotics Lab. Her research interests are in the areas of social robotics and affective computing, and include social learning, personalized adaptive robots, multimodal behaviours, and the uncanny valley effect in robots and virtual agents. She has published more than 100 research papers on these topics, receiving over 3000 citations. She was the coordinator of the EU FP7 EMOTE (EMbOdied-perceptive Tutors for Empathy-based learning) project (2012–2016). She is the recipient of a Swedish Research Council starting grant (2016–2020) and the Uppsala University PI for the EU Horizon 2020 ANIMATAS (Advancing intuitive human-machine interaction with human-like social capabilities for education in schools; 2018–2021) project, the COIN (Co-adaptive human-robot interactive systems) project funded by the Swedish Foundation for Strategic Research (2016–2021), and the project "The ethics and social consequences of AI & caring robots. Learning trust, empathy, and accountability" (2020–2024), supported by the Marianne and Marcus Wallenberg Foundation, Sweden. Castellano was a general co-chair of IVA 2017. She is an Associate Editor of Frontiers in Robotics and AI (Human-Robot Interaction section) and of IEEE Transactions on Affective Computing. Castellano received the 10-Year Technical Impact Award at the 2019 ACM International Conference on Multimodal Interaction.

Talk Title: Investigating People’s Affective Responses to Robots

The recently published EU guidelines for trustworthy AI warn of the need to study the social impact of AI systems integrated in all areas of our lives. AI systems such as robots in socially assistive roles can affect people’s social relationships and attachment, so one important area of investigation is how people emotionally respond to robots. One question that I address in my research is how a robot’s human-likeness in appearance and behaviour influences people’s affective perceptions of, and affective responses to, robots.

I present two studies investigating people’s affective perceptions of and responses to robots displaying emotional and empathic behaviour. I show that, in experiments with a mixed-embodiment robot, human-likeness seems to affect high- and low-level affective responses in different ways. I also show that people are able to understand both human-like and robot-specific empathic traits in a robot.

This work contributes to addressing a key question for ethical and trustworthy AI and robotics: when do people empathise and socially connect with robots?

Ellen Souza

Ellen Souza is a Professor at the Federal Rural University of Pernambuco, Brazil, and a postdoctoral researcher at the Institute of Mathematics and Computer Sciences (ICMC/USP). She holds a PhD in Computer Science from the Federal University of Pernambuco. Her research interests include digital inclusion, socially assistive robotics, text mining, and artificial intelligence.

Talk Title: Assistive Robot for Training Emotional Facial Expressions to People with the Symptoms of Alexithymia

Alexithymia is a disorder characterized by the inability to identify and describe one’s own emotions and by difficulty distinguishing and appreciating the emotions of others. Alexithymia has a prevalence of approximately 10% in the general population and is known to be comorbid with a number of psychiatric conditions. It also affects more than 50% of people with Autism Spectrum Disorder (ASD). A common treatment is behavioral analysis, coupled with occupational and speech therapy. Therapists often concentrate on building a foundation of naming emotions and appreciating a range of feelings through games, drawings, and stories. In this talk, I’ll present a different approach: using assistive robots to train emotional facial expressions in people with symptoms of alexithymia. The solution uses a deep neural network that captures the person’s face and classifies it into one of the six universal facial emotions. More than 65 robot-assisted therapy sessions were conducted by a multidisciplinary team composed of a psychologist, an occupational therapist, and a speech therapist.

Yukie Nagai

Yukie Nagai is a Project Professor at the International Research Center for Neurointelligence, the University of Tokyo. She received her Ph.D. in Engineering from Osaka University in 2004 and then worked as a postdoctoral researcher at the National Institute of Information and Communications Technology (NICT) and at Bielefeld University for five years. She became a Specially Appointed Associate Professor at Osaka University in 2009 and a Senior Researcher at NICT in 2017. Since April 2019, she has led the Cognitive Developmental Robotics Lab at the University of Tokyo.

Yukie Nagai investigates the neural mechanisms underlying social cognitive development using computational approaches. She designs neural network models that enable robots to acquire cognitive functions such as self-other cognition, estimation of others’ intentions and emotions, and altruism. Her computational studies suggest that the theory of predictive coding provides a unified account of both the continuity and the diversity of cognitive development.

Talk Title: Development of Perception and Learning Based on Predictive Coding

A theoretical framework called predictive coding has been attracting increasing attention in developmental robotics as well as in neuroscience. It suggests that the human brain works as a predictive machine that tries to minimize prediction errors. We have been investigating how children acquire the ability to perform predictive processing and what causes individual differences in its development. This talk presents two studies that examined the development of perception and learning in representational drawing.

The first experiment employed a computational neural network based on predictive coding. It revealed that aberrant precision of prior knowledge caused difficulties in perception and, consequently, in learning. Neural networks with hypo- or hyper-priors exhibited poor generalization ability or weak adaptability, whereas networks with moderate priors successfully acquired drawing behavior. The second experiment analyzed children’s drawings to verify the findings from the computational study. The results demonstrated that young children had undifferentiated prior representations and/or limited capability to adapt to input stimuli. The ability to draw target objects gradually improved with age. The immaturity observed in younger children was analogous to the difficulties caused by aberrant priors in neural networks. I discuss how this finding can be extended to account for affective perception based on predictive coding.

Call for contributions

Prospective participants are invited to submit a contribution in the form of an abstract of at most 350 words.

Submissions must be in PDF format and must contain the abstract title, authors, and affiliations. Send your PDF manuscript with [WASP ICDL 2020] in the subject line to:

pablo.alvesdebarros [at] iit.it

The abstracts will be peer-reviewed by experts from all over the world.

To encourage integration with local affective computing communities, student abstracts may be submitted in English, Spanish, or Portuguese.

Each accepted abstract will be presented as a 5-minute video (in English!) that will be shared on the workshop's social media. During the workshop, all videos will be streamed, and the authors will hold a joint 60-minute live Q&A with the audience.

Participants can also opt in to our Frontiers Research Topic on Affective Shared Perception. The abstract submitted to the workshop can also be submitted as an abstract to the Research Topic. If you opt in to the Research Topic, only submissions in English will be accepted.

We are very happy to announce that accepted abstracts will have a publication fee discount when submitting a paper to our Research Topic!

Topics of Interest:

The main topics include, but are not limited to:

- Affective perception and learning

- Affective modulation and decision making

- Developmental perspectives of shared perception

- Machine learning for shared perception

- Bio-inspired approaches for affective shared perception

- Affective processing for embodied and cognitive robots

- Multisensory modeling for conflict resolution in shared perception

- New psychological findings on shared perception

- Assistive aspects and applications of shared affective perception

Important dates:

Abstract submission deadline: 17th of October

Notification of acceptance: 20th of October

Frontiers Research Topic Abstract Deadline: 24th of October

Video submission: 26th of October

Frontiers Research Topic

We invite all workshop participants, as well as other interested researchers, to submit to our Frontiers Research Topic on Affective Shared Perception.

We welcome exciting research in the areas of social cognition, affective computing, and human-robot interaction from, among others, computer and cognitive scientists, psychologists, neuroscientists, and specialists in bio-inspired solutions. We envision that this collection will help tackle the existing problems in this area and provide the next step towards a real-world affective computing system.

Authors of accepted abstracts can opt in to the Research Topic during submission.

Organizers

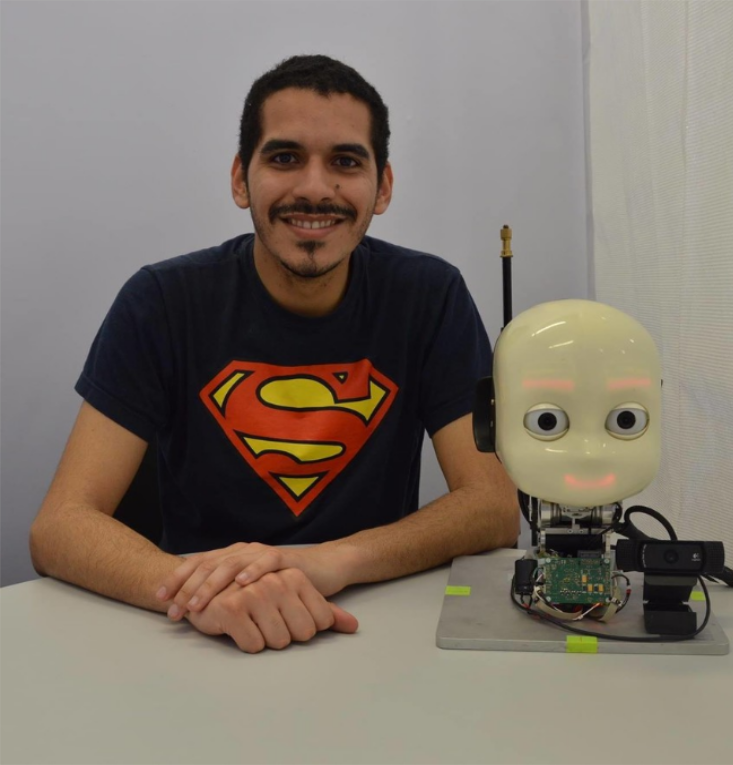

Pablo Barros

Pablo received his BSc in Information Systems from the Universidade Federal Rural de Pernambuco and his MSc in Computer Engineering from the Universidade de Pernambuco, both in Brazil. He received his Ph.D. in computer science from Universität Hamburg, Germany, and is currently a postdoctoral researcher at the Italian Institute of Technology in Genoa, Italy. His main research interests include deep learning and neurocognitive systems for emotional perception and representation, affect-based human-robot interaction, and its application to social robots. He has been a guest editor of the journals "IEEE Transactions on Affective Computing", "Frontiers in Neurorobotics", and "Elsevier Cognitive Systems Research". He served on the proposal and organizing committee, as publication chair, of the Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics 2020.

Alessandra Sciutti

Alessandra is the head of the COgNiTive Architecture for Collaborative Technologies (CONTACT) unit. In 2018 she was awarded the ERC Starting Grant wHiSPER, focused on the investigation of joint perception between humans and robots. She has published more than 60 papers and abstracts and participated in the coordination of the CODEFROR European IRSES project. She is an Associate Editor of Robotics and Autonomous Systems, Cognitive Systems Research, and the International Journal of Humanoid Robotics, and she has served as a member of the Program Committee for the International Conference on Human-Agent Interaction and the IEEE International Conference on Development and Learning and Epigenetic Robotics. The scientific aim of her research is to investigate the sensory and motor mechanisms underlying mutual understanding in human-human and human-robot interaction.

The workshop is organized in the framework of the Starting Grant wHiSPER (G.A. No 804388) funded by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme.

The workshop was sponsored by the journal Frontiers in Integrative Neuroscience.